Ian Milligan

Associate Vice-President, Research Oversight and Analysis

Professor of History

University of Waterloo

Ian Milligan (he/him) is Associate Vice-President, Research Oversight and Analysis at the University of Waterloo, where he is also a professor of history. In this service role within the Office of the Vice-President, Research and International, Milligan provides campus leadership for research oversight and compliance, and is the campus research integrity lead.

Milligan also helps to lead the Safeguarding Research portfolio, oversees the Office of Research Ethics and the Inclusive Research Team, supports research health & safety, serves as a lead on emergency issues related to research (including COVID research response), co-chairs the Waterloo Awards Committee, and helps to coordinate bibliometrics activities. Finally, Milligan co-led the campus-wide Research Data Management strategy and is currently working on its implementation.

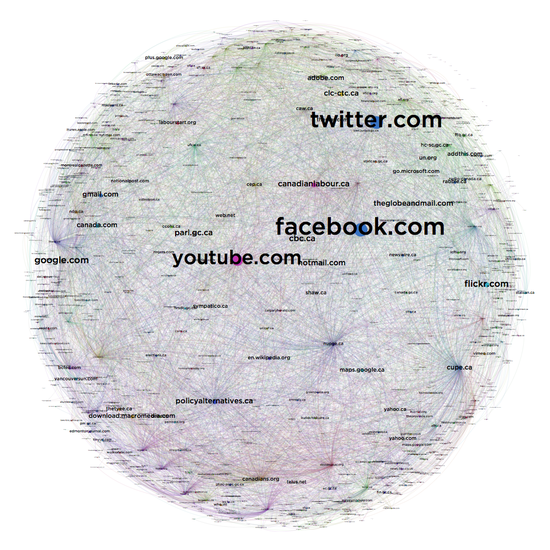

Alongside this service portfolio, Milligan maintains an active research agenda. His primary research focus is how historians can use web archives, as well as the impact of digital sources on historical practice more generally. He is the sole author of three books: The Transformation of Historical Research in the Digital Age (2022), History in the Age of Abundance (2019), and Rebel Youth (2014). Milligan also co-authored Exploring Big Historical Data (2015, with Shawn Graham and Scott Weingart) and co-edited the SAGE Handbook of Web History (2018, with Niels Brügger). Milligan was principal investigator of the Archives Unleashed project between 2017 and 2023 (the project lives on as an Internet Archive service).

In 2016, he was awarded the Canadian Society for Digital Humanities Outstanding Early Career Award and in 2019 he received the Arts Excellence in Research award from the University of Waterloo. In 2020, recognizing his track record of research and advocacy, the Association of Canadian Archivists awarded Milligan the Honourary Archivist Award. He is also a Fellow of the Royal Historical Society.

Milligan is currently co-editor of Internet Histories and was a co-program chair of the ACM/IEEE Joint Conference on Digital Libraries. He has an extensive interdisciplinary service record, sitting on selection committees for multiple granting agencies and on the steering committee for the ACM/IEEE Joint Conference on Digital Libraries. At Waterloo, Milligan has served on the University Senate as well as the Board of Governors.

He lives in Waterloo, Ontario, Canada with his partner, son, and daughter.


Interests

- Web archives and web history

- How technology is changing the historical profession

- Research data management

Education

PhD in History, 2012

York University

MA in History, 2007

York University

BA (Hon) in History, 2006

Queen's University