This post isn’t an exhaustive tutorial by any means; for that, I link to the main tutorials. But it gives you a sense of what I did to ingest data like webpages and PDF files into a research database, and it points you to a pretty cool clustering engine. It’s also still at a very basic level: I need to refine the fields as I get a better sense of how my project is evolving, and I’ll eventually need a better ingestion tool. But this’ll do for now. Good luck!

My work is taking me to larger and larger datasets, so finding relevant information has become a real challenge. I’ve dealt with this before, noting DevonTHINK as an alternative to something slow and cumbersome like OS X’s Spotlight. As datasets scale, keyword searching and n-gram counting have also shown some limitations.

One approach I’ve been taking is to run a clustering algorithm on my sources, as well as to index them for easy retrieval. I wanted to give you a quick sense of my workflow in this post.

1. Search Engine

I wanted to have a way to quickly find my material, so I’ve been experimenting with Apache Solr. What is Solr?

Solr (pronounced “solar”) is an open source enterprise search platform from the Apache Lucene project. Its major features include full-text search, hit highlighting, faceted search, dynamic clustering, database integration, and rich document (e.g., Word, PDF) handling. Providing distributed search and index replication, Solr is highly scalable. Solr is the most popular enterprise search engine. [wikipedia]

Sounds perfect for me! Implementing it was relatively easy. The first thing I did, and what I’d recommend, is to follow the official tutorial.

Once I had completed the tutorial, I created a second ‘collection’. The original data is all held on the ‘example’ server, located in the example/ directory of your Solr installation; I replicated it by recursively copying it (cp -R example webdata) and changing the references from ‘collection1’ to ‘webdata’ in the solr.xml configuration file.

2. Indexing Text Files

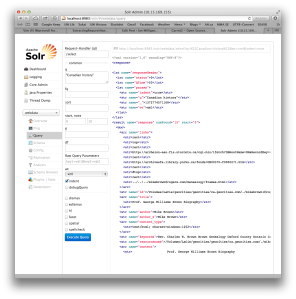

The key to getting Solr to index HTML, DOC, and similar files is to add functionality via the solrconfig.xml file (something which wasn’t crystal clear to me at first). What I needed to do was to enable Solr’s ExtractingRequestHandler. Instructions are here.

For my implementation, the following code went below the RealTimeGetHandler request handler, and above the SearchHandler.

    <requestHandler name="/update/extract" class="org.apache.solr.handler.extraction.ExtractingRequestHandler">
      <lst name="defaults">
        <str name="fmap.Last-Modified">last_modified</str>
        <str name="uprefix">ignored_</str>
      </lst>
      <lst name="date.formats">
        <str>yyyy-MM-dd</str>
      </lst>
    </requestHandler>

With that done, we can, for starters, use the included post.jar tool (from the tutorial) to recursively index an entire hierarchy of sources. This will now ingest the formats handled by Apache Tika (discussed in my last post), detailed here.

So for example, this command incorporated all of the PDFs, HTML files, DOCs, etc. in my archive.

java -Dauto -Drecursive -jar post.jar .
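If you ever want to send one file at a time rather than a whole directory, a request to the /update/extract handler configured above could be sketched roughly like this. This is a minimal sketch, not what post.jar does internally: the base URL (the example server’s usual localhost:8983 address), the ‘webdata’ core in the path, and the helper names build_extract_url and post_file are all my assumptions. The literal.id and commit parameters are standard ExtractingRequestHandler options.

```python
from urllib.parse import urlencode
from urllib.request import Request, urlopen

# Assumptions: default address of the example server, and the 'webdata'
# collection created earlier. Adjust both for your own setup.
SOLR_BASE = "http://localhost:8983/solr"
CORE = "webdata"


def build_extract_url(doc_id, base=SOLR_BASE, core=CORE, commit=True):
    """Build the /update/extract URL for a single document (hypothetical helper)."""
    params = {"literal.id": doc_id}  # sets the document's 'id' field
    if commit:
        params["commit"] = "true"    # make the document searchable right away
    return f"{base}/{core}/update/extract?{urlencode(params)}"


def post_file(path, doc_id, content_type="application/octet-stream"):
    """Send a file's raw bytes to Solr and return the HTTP status code.

    The generic content type is an assumption; Tika is expected to work out
    the real format (PDF, HTML, DOC, ...) on the Solr side.
    """
    with open(path, "rb") as fh:
        body = fh.read()
    req = Request(
        build_extract_url(doc_id),
        data=body,
        headers={"Content-Type": content_type},
        method="POST",
    )
    with urlopen(req) as resp:
        return resp.status
```

With Solr running, something like post_file("notes/report.pdf", "report.pdf") would index a single document (the path here is made up).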

You should now be able to search things.
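Searching can be done from the Solr admin page, but here is a rough sketch of doing it from a script. It assumes the default localhost:8983 address and the ‘webdata’ collection; build_select_url and extract_ids are names I made up, and the sample response is hand-written to mimic the shape of Solr’s JSON output rather than taken from a real query.

```python
import json
from urllib.parse import urlencode


def build_select_url(query, rows=10,
                     base="http://localhost:8983/solr", core="webdata"):
    """Build a URL for Solr's /select handler, asking for JSON (hypothetical helper)."""
    params = {"q": query, "rows": rows, "wt": "json"}
    return f"{base}/{core}/select?{urlencode(params)}"


def extract_ids(response_text):
    """Pull the 'id' of each hit out of a Solr JSON response body."""
    data = json.loads(response_text)
    return [doc.get("id") for doc in data["response"]["docs"]]


# Hand-written sample mimicking the structure of a Solr JSON response.
sample_response = json.dumps({
    "response": {
        "numFound": 2,
        "start": 0,
        "docs": [{"id": "a.pdf"}, {"id": "b.html"}],
    }
})

print(build_select_url("clustering"))
print(extract_ids(sample_response))
```

To run it against a live server, fetch the URL with urllib.request.urlopen and pass the decoded body to extract_ids.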

Note that this data will have to be cleaned up depending on what you want to do, which might require digging into some of the field names, making sure you get the data you want, as otherwise your search results may be too cluttered. But it’s a start!
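One quick way to start digging into the field names is to count which fields actually show up across a batch of search results. The sketch below is only illustrative: field_counts is a made-up helper, and the sample documents are invented stand-ins for the "docs" list in a Solr JSON response (only last_modified comes from the configuration above).

```python
from collections import Counter


def field_counts(docs):
    """Count how many documents carry each field name (hypothetical helper)."""
    counts = Counter()
    for doc in docs:
        counts.update(doc.keys())
    return counts


# Invented sample documents, standing in for real search results.
sample_docs = [
    {"id": "a.pdf", "title": "Report", "last_modified": "2013-01-05T00:00:00Z"},
    {"id": "b.html", "title": "Home page"},
    {"id": "c.doc"},
]

for name, n in field_counts(sample_docs).most_common():
    print(f"{name}: {n} of {len(sample_docs)} documents")
```

Fields that appear in only a handful of documents, or that you never search on, are good candidates for cleaning up or ignoring.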

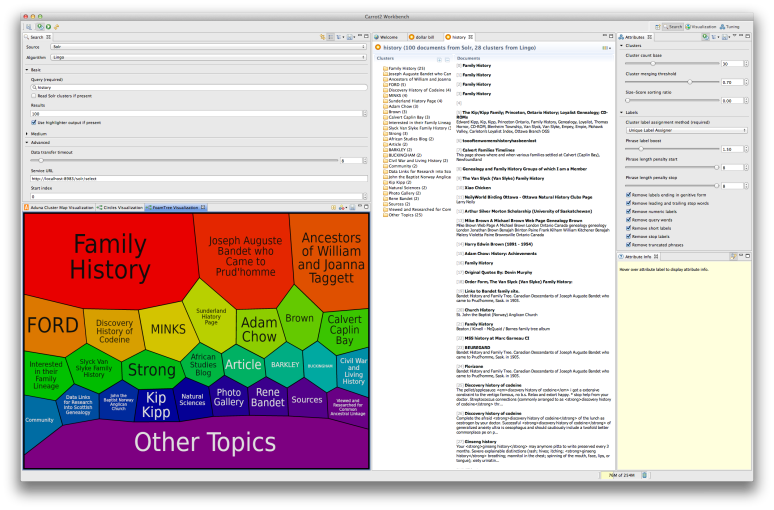

3. Clustering with Carrot2

Carrot2 is shockingly easy to get up and running. You download it here; it’s an app or exe supported on OS X, Linux, or Windows. Now that your data is indexed in Solr, you can just tell Carrot2 to draw on Solr, run some searches, and... presto: results!
